Aimyvoice TTS

Aimyvoice is a speech synthesis model from Just AI. The model is distinguished by:

- high processing speed

- a streaming mode for generating long texts

- support for SSML for tweaking pronunciation

- the ability to create derived voices with a preset pronunciation dictionary

Aimyvoice is hosted on the Caila platform. The model is available:

- as a standalone product: Aimyvoice Website

- as a service in Caila: Aimyvoice Model

Voice testing

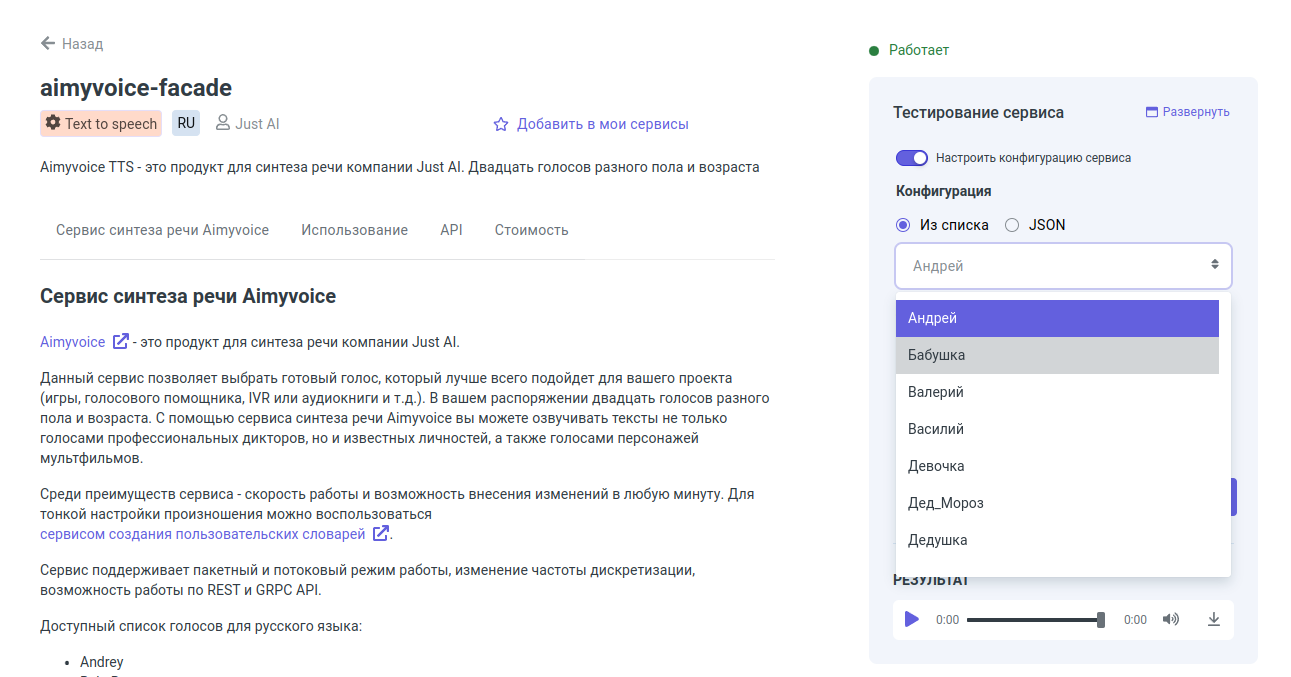

Voices can be tested on the service page.

You can select a voice from a list or set synthesis parameters in JSON format.


Connect using API

The speech synthesis service can be accessed in the same way as any other Caila service.

In addition, there are two specialised adapters for TTS services.

Caila API

HTTP TTS

POST https://app.caila.io/api/mlpgate/account/just-ai/model/aimyvoice-facade/tts

In this method, all parameters, including the voice name and encoding parameters, must be passed in the request body. The response is returned as application/octet-stream in LINEAR16_PCM encoding, not as JSON.

Request example:

POST https://app.caila.io/api/mlpgate/account/just-ai/model/aimyvoice-facade/tts

{
  "text": "Hello",
  "voice": "Marry",
  "outputAudioSpec": {
    "audioEncoding": "LINEAR16_PCM",
    "sampleRateHertz": 8000,
    "chunkSizeKb": 1
  }
}
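The request above can be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not a reference client: the `MLP-API-KEY` header name, the mono channel layout, and the helper names `build_tts_payload`, `synthesize`, and `save_pcm_as_wav` are illustrative and not taken from this page. Because the response is raw PCM rather than a playable file, the last helper wraps it in a WAV container.

```python
import json
import urllib.request
import wave

TTS_URL = "https://app.caila.io/api/mlpgate/account/just-ai/model/aimyvoice-facade/tts"


def build_tts_payload(text, voice, sample_rate_hz=8000, chunk_size_kb=1):
    """Build the JSON body shown in the request example."""
    return {
        "text": text,
        "voice": voice,
        "outputAudioSpec": {
            "audioEncoding": "LINEAR16_PCM",
            "sampleRateHertz": sample_rate_hz,
            "chunkSizeKb": chunk_size_kb,
        },
    }


def synthesize(text, voice, api_key, sample_rate_hz=8000):
    """POST the request and return the raw PCM bytes of the response body."""
    body = json.dumps(build_tts_payload(text, voice, sample_rate_hz)).encode("utf-8")
    request = urllib.request.Request(
        TTS_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: the API key is passed in this header.
            "MLP-API-KEY": api_key,
        },
    )
    with urllib.request.urlopen(request) as response:
        return response.read()


def save_pcm_as_wav(pcm_bytes, path, sample_rate_hz=8000):
    """Wrap raw 16-bit PCM in a WAV container (mono is an assumption)."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # LINEAR16 means 2 bytes per sample
        wav_file.setframerate(sample_rate_hz)
        wav_file.writeframes(pcm_bytes)
```

A call such as `save_pcm_as_wav(synthesize("Hello", "Marry", api_key="YOUR_API_KEY"), "hello.wav")` would then produce a file that ordinary audio players can open. The sample rate passed to `save_pcm_as_wav` has to match the `sampleRateHertz` sent in the request, otherwise playback speed will be wrong.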

GRPC processSynthesis

Performs synthesis via GRPC. The method accepts the same request data as the HTTP TTS method. The response is a stream of chunks, each containing a small audio segment in PCM format.

rpc processSynthesis(ClientTtsRequestProto) returns (stream ClientTtsResponseProto)

To learn how to connect to Caila via GRPC, see the Caila documentation on GRPC connections.
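On the client side, a streamed response usually has to be reassembled into one buffer. The sketch below assumes you have already extracted the audio bytes from each ClientTtsResponseProto message; the message fields are not described on this page, so that step is left out. The helper names and the mono 16-bit layout are assumptions made for illustration.

```python
from typing import Iterable

BYTES_PER_SAMPLE = 2  # 16-bit PCM; assumption carried over from LINEAR16_PCM


def collect_pcm(chunks: Iterable[bytes]) -> bytes:
    """Concatenate audio chunks, in arrival order, into one PCM buffer."""
    buffer = bytearray()
    for chunk in chunks:
        buffer.extend(chunk)
    return bytes(buffer)


def pcm_duration_seconds(pcm: bytes, sample_rate_hz: int) -> float:
    """Duration of mono 16-bit PCM audio at the given sample rate."""
    return len(pcm) / (BYTES_PER_SAMPLE * sample_rate_hz)
```

For playback with low latency you would feed each chunk to the audio device as it arrives instead of collecting everything first; collecting is simply the easiest variant to show.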

Pronunciation settings

Aimyvoice supports a rich set of markup tags for controlling pauses, breathing, and pronunciation.

Try synthesising this text:

<break time="0.8s" breath="1" breath_power="0.8" breath_dura="1.2"/>

My uncle <break time="1s"/> is of the most honest rules. <break time="250ms"/> When seriously unwell.

<break time="0.5s"/> He made others respect him. And you couldn’t think of anything better.

For more information on markup, see the original Aimyvoice documentation.
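When the text is generated by a program, the pause tags from the example can be produced by a small helper rather than typed by hand. This is a minimal sketch: `break_tag` and `join_with_pauses` are hypothetical names, and only the attributes that appear in the example above (`time`, `breath`, `breath_power`, `breath_dura`) are known from this page.

```python
from xml.sax.saxutils import quoteattr


def break_tag(time, **attributes):
    """Render a <break/> tag; extra keyword arguments become attributes."""
    parts = ["time=" + quoteattr(time)]
    parts += [f"{name}={quoteattr(str(value))}" for name, value in attributes.items()]
    return "<break " + " ".join(parts) + "/>"


def join_with_pauses(sentences, pause="250ms"):
    """Join sentences, inserting the same pause between each pair."""
    return (" " + break_tag(pause) + " ").join(sentences)
```

For instance, `break_tag("0.8s", breath=1, breath_power=0.8, breath_dura=1.2)` reproduces the first tag of the example text, and the resulting string can be sent as the `text` value of a synthesis request.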

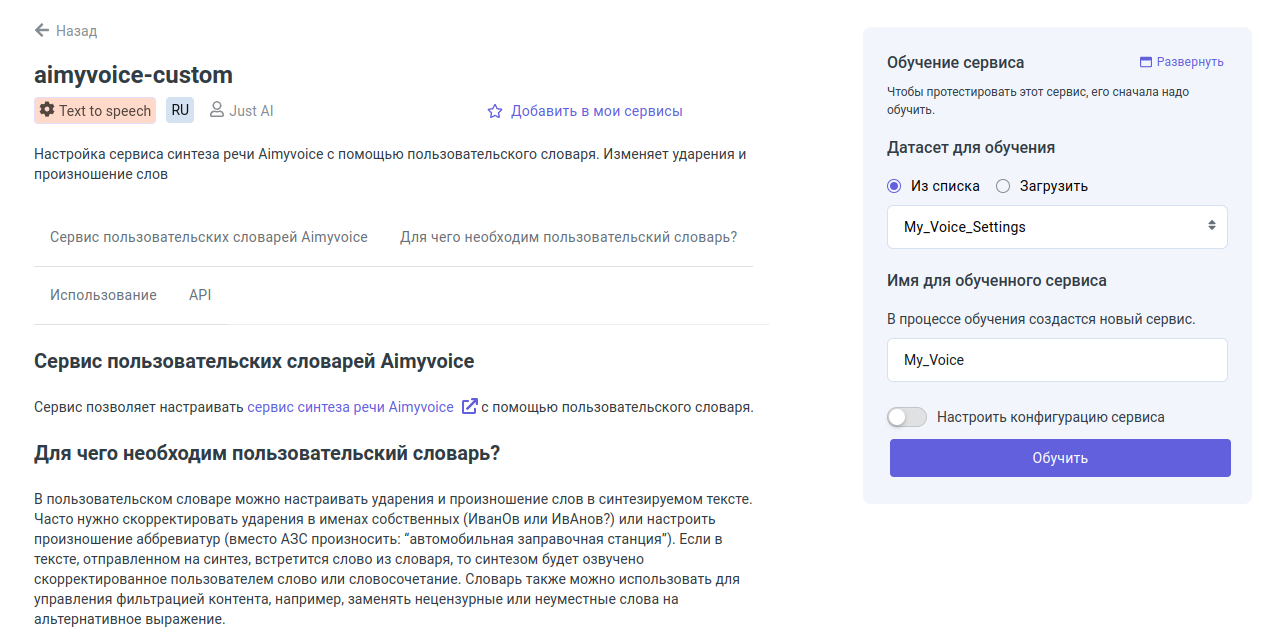

Creating derived voices

In Caila you can create a derived voice by configuring a pronunciation dictionary and some other voice parameters. Let’s look at how this can be done.

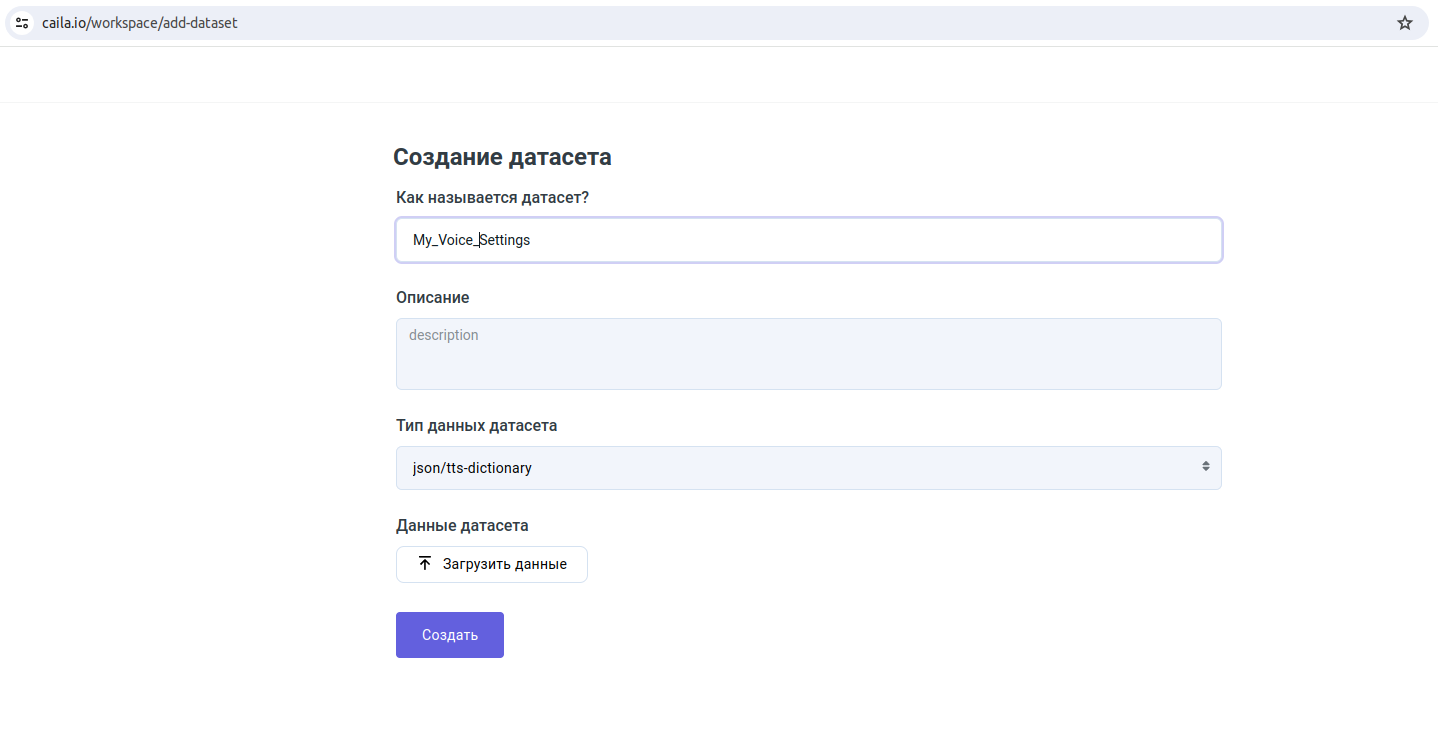

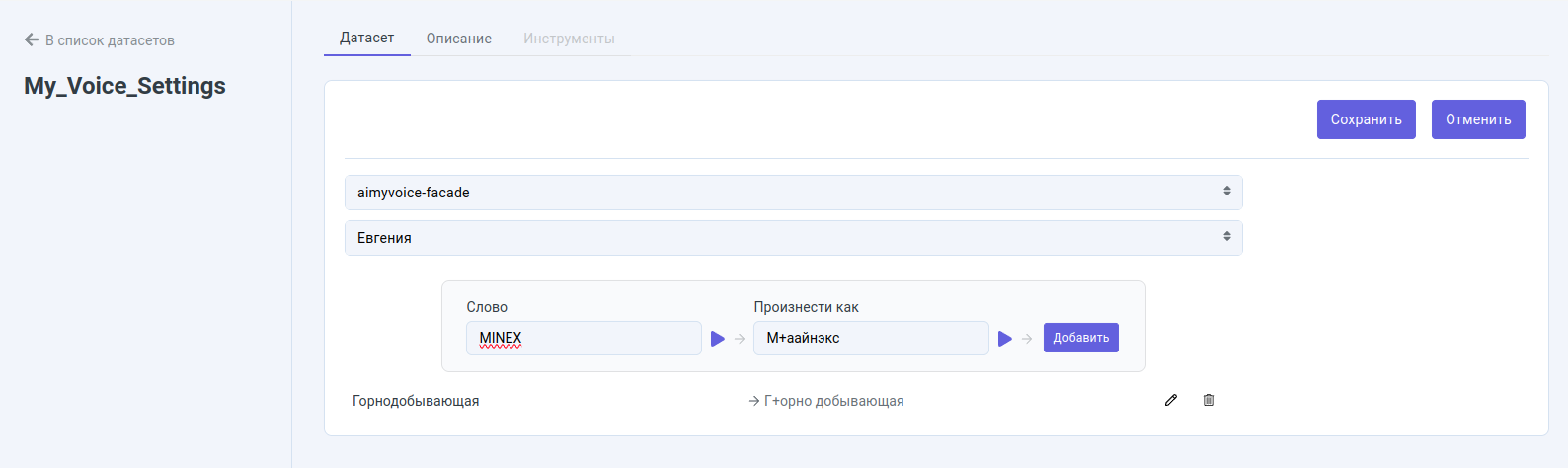

Create a dataset of the json/tts-dictionary type at https://caila.io/workspace/dataset.

Populate the dataset with pronunciation rules.

Train the service on your rules at https://caila.io/catalog/just-ai/aimyvoice-custom.

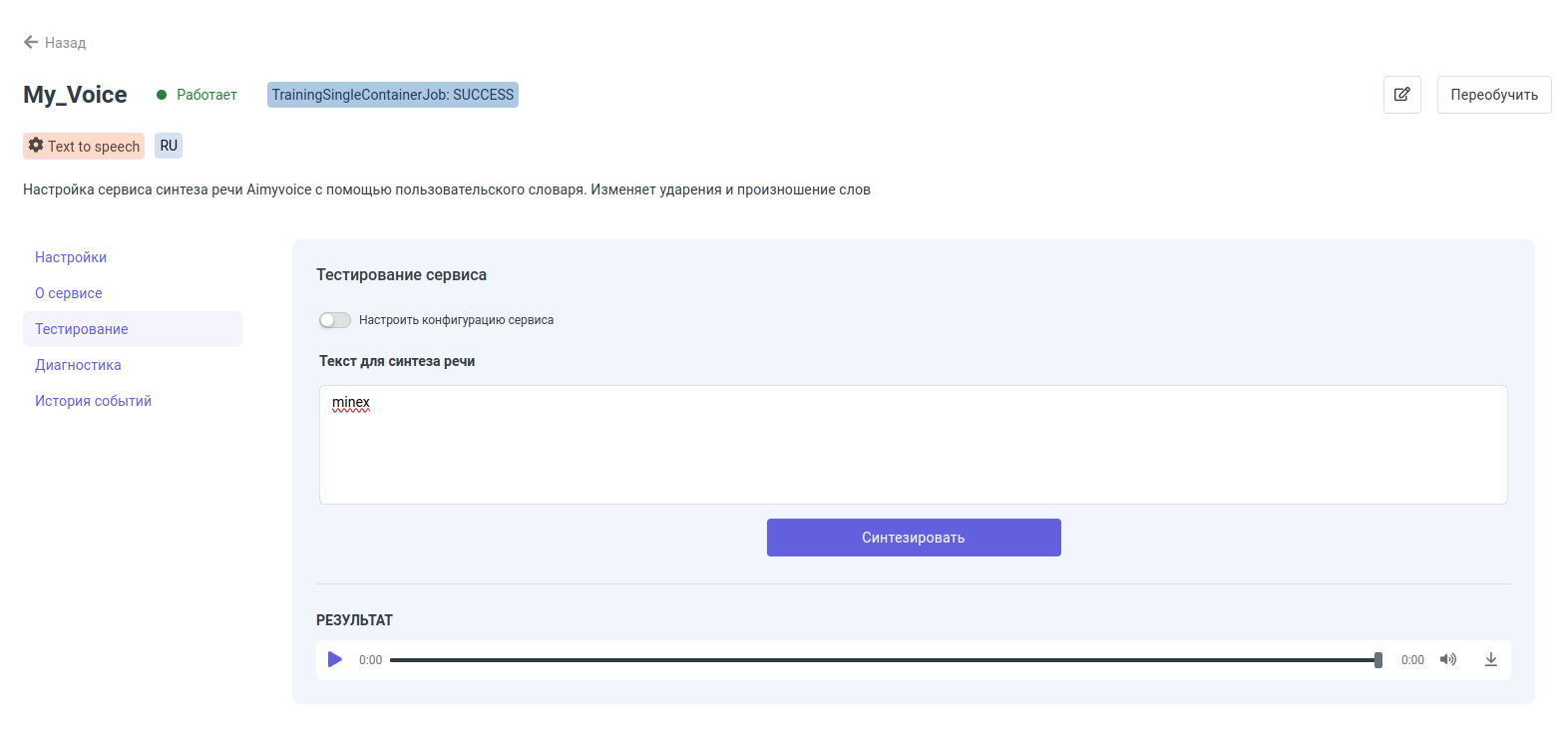

Test the resulting derived service in My space.

You can find the service on the Services/Fitted tab.
